I want to execute a PowerShell Script within an SSIS package, but there is no PowerShell task. How do I do that in SSIS?

Solution

There are 3 possible solutions within SSIS:

- Using a Custom Task

- Using the Execute Process Task

- Using the Script Task

For this example I will use a simple PowerShell script that exports a list of installed hotfixes on the server to a CSV file, but the possibilities with PowerShell are endless.

#PowerShell: c:\temp\hotfixes.ps1

[CmdletBinding()]

Param(

# FilePathCsv parameter is required

[Parameter(Mandatory=$True,Position=1)]

[string]$FilePathCsv

)

# Create folder if it doesn't exists

$FolderPath = split-path $FilePathCsv

# Check if folder exists

if (-Not (Test-Path $FolderPath))

{

Write-Host "Creating folder $FolderPath"

New-Item -ItemType directory -Path $FolderPath

}

# Export list of hotfixes to CSV file

Get-WmiObject -Class Win32_QuickFixEngineering -ComputerName .| Export-Csv $FilePathCsv

The script has a parameter to supply a csv filepath. It checks whether the folder mentioned in this path exists. If not it creates it. Then it exports the data to specified CSV file.

A) Using a Custom Task

You could look for a custom task like the one on codeplex (also see this example), but it seems not to be an active project. And so far I haven't found any commercial PowerShell Tasks for SSIS.

B) Using the Execute Process Task

To execute the PowerShell script with an Execute Process Task you need a command that looks like:

powershell.exe -command "c:\temp\hotfixes.ps1 -FilePathCsv c:\temp\export\hotfixes.csv"

Add an Execute Process Task to the Control Flow and give it a suitable name like "EPR - Create hotfixes CSV". Edit it and go to the Process page. In the Excutable property you add the complete path of the PowerShell executable. For example: C:\Windows\System32\WindowsPowerShell\v1.0\powershell.exe

And in the Arguments property you add the rest of the command: -command "c:\temp\hotfixes.ps1 -FilePathCsv c:\temp\export\hotfixes.csv"

|

| PowerShell via Execute Process Task |

This step is optional but with two SSIS string variables (or parameters) containing the paths and an expression on the arguments property you can make it a little more flexible: "-command \"" + @[User::PathPowerShellScript] + " -FilePathCsv " + @[User::PathHotfixes] + "\""

|

| Process Task with variables(expressions) in the arguments |

When you run this in Visual Studio you will see a PowerShell window for a couple of seconds. With the StandardOutputVariable and StandardErrorVariable you can catch the output of the PowerShell script into an SSIS variable.

|

| PowerShell window when executing the Execute Process Task |

C) Using the Script Task

If you don't like the Execute Process Task then you could use the Script Task to execute a PowerShell script, but to use PowerShell in .NET you need to install the Windows Software Development Kit (SDK) for Windows. It contains the assembly System.Management.Automation.dll which we need to reference in our Script Task later on. Don't forget to install it on all your machines (DTAP: Development, Test, Acceptance and Production).

|

| Choose Windows Software Development Kit for the libraries |

First add two string variables (or parameters) to your package. PathHotfixes containts the filepath of the to be created CSV file and PathPowerShellScript contains the filepath of the PowerShell file.

| String variables |

Add the Script Task to the Control Flow and give it a suitable name like "SCR - Create hotfixes CSV". Then edit it choose the Script Language (C# or VB.NET).

|

| Choose ScriptLanguage C# or VB |

Then add the two string variables (or parameters) as Read Only Variables. We are using them to avoid hardcoded paths in our script.

|

| ReadOnlyVariables |

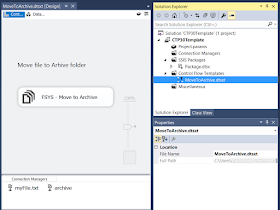

After that hit the Edit Script button to open the VSTA environment. In the Solution Explorer (upper right corner) right click the References and choose Add Reference... then browse to C:\Program Files (x86)\Reference Assemblies\Microsoft\WindowsPowerShell\3.0 and select the System.Management.Automation.dll file.

|

| Add Reference |

In the code we need to add extra namespaces (usings in C# and imports VB) to shorten the code. You can either add these rows to the existing namespaces by adding them in the region Namespaces. Or you can add a separate region for custom namespaces.

#region Custom Namespaces using System.Management.Automation; using System.Management.Automation.Runspaces; #endregion

or VB.NET

#Region "Custom Namespaces" Imports System.Management.Automation Imports System.Management.Automation.Runspaces #End Region

Next go to the main method and add the following code

// C# code ////// This method executes a PowerShell file with a parameter /// public void Main() { // Create a new Runspace to run your command in RunspaceConfiguration runspaceConfiguration = RunspaceConfiguration.Create(); Runspace runspace = RunspaceFactory.CreateRunspace(runspaceConfiguration); runspace.Open(); // Create a new command with a parameter Command command = new Command(Dts.Variables["User::PathPowerShellScript"].Value.ToString()); CommandParameter commandParameter = new CommandParameter("FilePathCsv", Dts.Variables["User::PathHotfixes"].Value.ToString()); command.Parameters.Add(commandParameter); // Execute the PowerShell script in the Runspace Pipeline pipeline = runspace.CreatePipeline(); pipeline.Commands.Add(command); pipeline.Invoke(); // Close the Script Task and return Success Dts.TaskResult = (int)ScriptResults.Success; }

or VB.NET

' VB.NET code

' This method executes a PowerShell file with a parameter

Public Sub Main()

' Create a new Runspace to run your command in

Dim runspaceConfiguration As RunspaceConfiguration = RunspaceConfiguration.Create()

Dim runspace As Runspace = RunspaceFactory.CreateRunspace(runspaceConfiguration)

runspace.Open()

' Create a new command with a parameter

Dim command As New Command(Dts.Variables("User::PathPowerShellScript").Value.ToString())

Dim commandParameter As New CommandParameter("FilePathCsv", Dts.Variables("User::PathHotfixes").Value.ToString())

command.Parameters.Add(commandParameter)

' Execute the PowerShell script in the Runspace

Dim pipeline As Pipeline = runspace.CreatePipeline()

pipeline.Commands.Add(command)

pipeline.Invoke()

' Close the Script Task and return Success

Dts.TaskResult = ScriptResults.Success

End Sub

Alternative: If you don't want to store the PowerShell code in a separate file because it makes it harder to deploy it through the DTAP environment then you can also add the PowerShell code directly in your .NET code or store it in an SSIS variable and pass that to the Script Task. In that case the code slightly changes.

// C# code ////// This method executes a PowerShell script /// public void Main() { // Create a new Runspace to run your script in RunspaceConfiguration runspaceConfiguration = RunspaceConfiguration.Create(); Runspace runspace = RunspaceFactory.CreateRunspace(runspaceConfiguration); runspace.Open(); // Create string with PowerShell code (or get it from an SSIS variable) string PowerShellScript = @"$FilePathCsv = ""XXX"" # Create folder if it doesn't exists $FolderPath = split-path $FilePathCsv # Check if folder exists if (-Not (Test-Path $FolderPath)) { Write-Host ""Creating folder $FolderPath"" New-Item -ItemType directory -Path $FolderPath } # Export list of hotfixes to CSV file Get-WmiObject -Class Win32_QuickFixEngineering -ComputerName .| Export-Csv $FilePathCsv"; // Replace the hardcode dummy path with a value from an SSIS variable string exportFile = Dts.Variables["User::PathHotfixes"].Value.ToString(); PowerShellScript = PowerShellScript.Replace("XXX", exportFile); // Execute the PowerShell script in the Runspace Pipeline pipeline = runspace.CreatePipeline(); pipeline.Commands.AddScript(PowerShellScript); pipeline.Invoke(); // Close the Script Task and return Success Dts.TaskResult = (int)ScriptResults.Success; }

or VB.NET (less readable due the lack of a good string continuation on multiple lines in VB)

' VB.NET code

' This method executes a PowerShell script

Public Sub Main()

' Create a new Runspace to run your script in

Dim runspaceConfiguration As RunspaceConfiguration = RunspaceConfiguration.Create()

Dim runspace As Runspace = RunspaceFactory.CreateRunspace(runspaceConfiguration)

runspace.Open()

' Create string with PowerShell code (or get it from an SSIS variable)

Dim PowerShellScript As String = "$FilePathCsv = ""XXX""" & Environment.NewLine &

"# Create folder if it doesn't exists" & Environment.NewLine &

"$FolderPath = split-path $FilePathCsv" & Environment.NewLine &

"# Check if folder exists" & Environment.NewLine &

"if (-Not (Test-Path $FolderPath))" & Environment.NewLine &

"{" & Environment.NewLine &

" Write-Host ""Creating folder $FolderPath""" & Environment.NewLine &

" New-Item -ItemType directory -Path $FolderPath" & Environment.NewLine &

"}" & Environment.NewLine &

"# Export list of hotfixes to CSV file" & Environment.NewLine &

"Get-WmiObject -Class Win32_QuickFixEngineering -ComputerName .| Export-Csv $FilePathCsv"

' Replace the hardcode dummy path with a value from an SSIS variable

Dim exportFile As String = Dts.Variables("User::PathHotfixes").Value.ToString()

PowerShellScript = PowerShellScript.Replace("XXX", exportFile)

' Execute the PowerShell script in the Runspace

Dim pipeline As Pipeline = runspace.CreatePipeline()

pipeline.Commands.AddScript(PowerShellScript)

pipeline.Invoke()

' Close the Script Task and return Success

Dts.TaskResult = ScriptResults.Success

End Sub

After that close the VSTA editor and the Script Task Editor and test the Script Task. If it was successful you can use a Data Flow Task to read the CSV file. With this relative easy code and this custom task example you could create your own custom PowerShell task.