Azure Data Factory (V2) now supports running SSIS packages in an Integration Runtime, but you are charged by the hour. How can I automatically pause (and resume) my SSIS environment in Azure to save some money on my Azure bill?

*Pause your Integration Runtime in the portal*

Solution

For this example I'm using an Integration Runtime (IR) that runs an SSIS package every hour during working hours. After working hours it is no longer necessary to refresh the data warehouse, so the IR can be suspended to save money. I will also suspend the trigger that runs the pipeline (package) each hour, to prevent errors while the IR is paused. For this solution I will use a PowerShell script that runs in an Azure Automation Runbook.

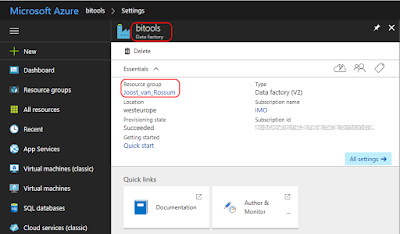

1) Collect parameters

Before we start coding, we first need to get the name of the Azure Data Factory and its resource group.

*Your Azure Data Factory (V2)*

If you also want to disable the trigger, you need its name as well. This is probably only necessary if you run the package hourly and didn't create separate triggers for each hour. You can find it by clicking on Author & Monitor under Quick links.

*Your trigger*

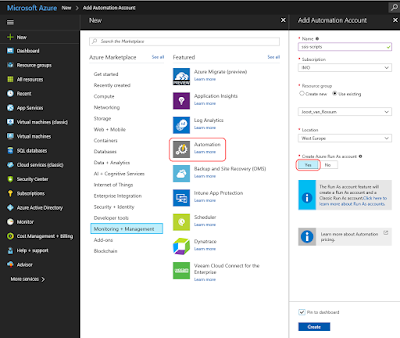
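If you prefer PowerShell over the portal, the trigger names can also be listed with the same AzureRM.DataFactoryV2 module that the runbook uses later. Below is a minimal sketch. It assumes you are already logged in (for example with Add-AzureRmAccount) and that the module is installed locally. The function name Get-TriggerOverview is hypothetical.

```powershell
# Hypothetical helper: list the triggers of a Data Factory (V2) with their state.
# Assumes an authenticated session and the AzureRM.DataFactoryV2 module.
function Get-TriggerOverview {
    param(
        [Parameter(Mandatory)] [string] $DataFactoryName,
        [Parameter(Mandatory)] [string] $ResourceGroup
    )

    # Without -Name the cmdlet returns all triggers of the factory
    Get-AzureRmDataFactoryV2Trigger `
        -DataFactoryName $DataFactoryName `
        -ResourceGroupName $ResourceGroup |
        Select-Object -Property Name, RuntimeState
}
```

For this example it would be called as `Get-TriggerOverview -DataFactoryName 'ADF-SSISJoost' -ResourceGroup 'joost_van_rossum'`, and the Name column gives the value for `$TriggerName`.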

2) Azure Automation Account

Create an Azure Automation Account. You can find it under + New, Monitoring + Management. Make sure that Create Azure Run As account is turned on.

*Azure Automation Account*

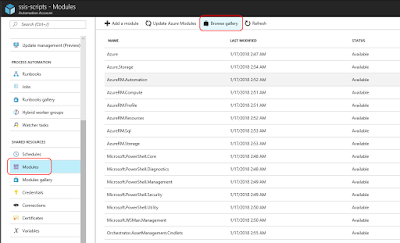

3) Import Module

We need to tell our code about Integration Runtimes in Azure Data Factory. You do this by adding modules. Scroll down in the menu of the Automation Account and click on Modules. Now you see all installed modules. We need to add the module called AzureRM.DataFactoryV2; however, it depends on AzureRM.Profile (≥ 4.2.0). Click on Browse gallery, search for AzureRM.Profile and import it, then repeat this for AzureRM.DataFactoryV2. Make sure to add the V2 version!

*Import modules*

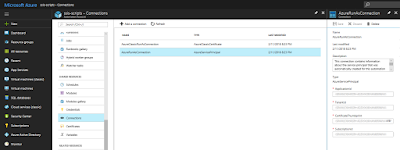
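A missing or outdated AzureRM.Profile only shows up later, as a failing cmdlet in the runbook. You could optionally add a pre-flight check. Below is a minimal sketch. Test-MinimumModuleVersion is a hypothetical helper. The sketch also assumes that modules imported into the Automation Account are visible to Get-Module -ListAvailable inside the runbook.

```powershell
# Hypothetical pre-flight check: is a module available in at least $MinimumVersion?
function Test-MinimumModuleVersion {
    param(
        [Parameter(Mandatory)] [string] $Name,
        [Parameter(Mandatory)] [version] $MinimumVersion
    )

    # All available versions of the module (nothing if it is not installed)
    $available = Get-Module -ListAvailable -Name $Name -ErrorAction SilentlyContinue
    foreach ($module in $available) {
        if ($module.Version -ge $MinimumVersion) { return $true }
    }
    return $false
}

# Example: warn early instead of failing on the first AzureRM cmdlet
if (-not (Test-MinimumModuleVersion -Name 'AzureRM.Profile' -MinimumVersion '4.2.0')) {
    Write-Warning 'AzureRM.Profile 4.2.0 or higher was not found.'
}
```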

4) Connections

This step is for your information only and to help you understand the code. Under Connections you will find a default connection named 'AzureRunAsConnection' that contains information about the Azure environment, like the tenant ID and the subscription ID. To avoid hardcoded connection details, we will retrieve some of these fields in the PowerShell code.

*AzureRunAsConnection*
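You can check the connection fields before logging in, so that a misconfigured connection fails with a clear message. Below is a minimal sketch. Assert-RunAsConnection is a hypothetical helper. The sketch assumes that the object returned by Get-AutomationConnection exposes the fields TenantId, ApplicationId, CertificateThumbprint and SubscriptionId.

```powershell
# Hypothetical helper: fail early when the Run As connection misses a field.
# Works for hashtables and objects, because $Connection.$field reads both.
function Assert-RunAsConnection {
    param([Parameter(Mandatory)] [object] $Connection)

    $required = 'TenantId', 'ApplicationId', 'CertificateThumbprint', 'SubscriptionId'
    $missing = @($required | Where-Object { -not $Connection.$_ })
    if ($missing.Count -gt 0) {
        throw "Connection is missing: $($missing -join ', ')"
    }
}
```

In a runbook you would call it as `Assert-RunAsConnection -Connection (Get-AutomationConnection -Name 'AzureRunAsConnection')`. The Run As account may have access to more than one subscription. In that case you could also select the right one after logging in, with `Set-AzureRmContext -SubscriptionId $ServicePrincipalConnection.SubscriptionId`. The scripts in this post do not do that.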

5) Runbooks

Now it is time to add a new Azure Runbook for the PowerShell code. Click on Runbooks and then add a new runbook (there are also five example runbooks, of which AzureAutomationTutorialScript could be useful as an example). Give your new Runbook a suitable name and choose PowerShell as type. There will be two separate runbooks/scripts: one for pause and one for resume. When you have finished the Pause script, repeat this for the Resume script.

*Create new Runbook*

6) Edit Script

After clicking Create in the previous step, the editor will be opened. When editing an existing Runbook, you need to click on the Edit button to edit the code. You can copy and paste the code of one of the scripts below into your editor. Study the green comments to understand the code. Also make sure to fill in the right values for the variables (see Parameters).

The first script is the pause script and the second script is the resume script. You could merge both scripts and use an if statement on the status property to either pause or resume, but I prefer two separate scripts both with their own schedule.

```powershell
# This script pauses your Integration Runtime (and its trigger) if it is running

# Parameters
$ConnectionName = 'AzureRunAsConnection'
$DataFactoryName = 'ADF-SSISJoost'
$ResourceGroup = 'joost_van_rossum'
$TriggerName = 'Hourly'

# Do not continue after an error
$ErrorActionPreference = "Stop"

########################################################
# Log in to Azure (standard code)
########################################################
Write-Verbose -Message 'Connecting to Azure'
try
{
    # Get the connection "AzureRunAsConnection"
    $ServicePrincipalConnection = Get-AutomationConnection -Name $ConnectionName

    'Log in to Azure...'
    $null = Add-AzureRmAccount `
        -ServicePrincipal `
        -TenantId $ServicePrincipalConnection.TenantId `
        -ApplicationId $ServicePrincipalConnection.ApplicationId `
        -CertificateThumbprint $ServicePrincipalConnection.CertificateThumbprint
}
catch
{
    if (!$ServicePrincipalConnection)
    {
        # You forgot to turn on 'Create Azure Run As account'
        $ErrorMessage = "Connection $ConnectionName not found."
        throw $ErrorMessage
    }
    else
    {
        # Something went wrong
        Write-Error -Message $_.Exception.Message
        throw $_.Exception
    }
}
########################################################

# Get Integration Runtime in Azure Data Factory
# (assumes the factory contains exactly one Integration Runtime)
$IntegrationRuntime = Get-AzureRmDataFactoryV2IntegrationRuntime `
    -DataFactoryName $DataFactoryName `
    -ResourceGroupName $ResourceGroup

# Check if Integration Runtime was found
if (!$IntegrationRuntime)
{
    # Your ADF does not have an Integration Runtime
    # or the ADF does not exist
    $ErrorMessage = "No Integration Runtime found in ADF $($DataFactoryName)."
    throw $ErrorMessage
}
elseif ($IntegrationRuntime.State -eq "Started")
{
    # The Integration Runtime is running

    <# Start Trigger Deactivation #>
    # Get the trigger to check if it exists
    $Trigger = Get-AzureRmDataFactoryV2Trigger `
        -DataFactoryName $DataFactoryName `
        -Name $TriggerName `
        -ResourceGroupName $ResourceGroup

    if (!$Trigger)
    {
        # Possible causes:
        # The ADF does not exist (typo)
        # The trigger does not exist (typo)
        $ErrorMessage = "Trigger $($TriggerName) not found."
        throw $ErrorMessage
    }
    elseif ($Trigger.RuntimeState -eq "Started")
    {
        # The trigger is activated: stop it
        Write-Output "Stopping Trigger $($TriggerName)"
        $null = Stop-AzureRmDataFactoryV2Trigger `
            -DataFactoryName $DataFactoryName `
            -Name $TriggerName `
            -ResourceGroupName $ResourceGroup `
            -Force
    }
    else
    {
        # Write message to screen (not throwing error)
        Write-Output "Trigger $($TriggerName) is not activated."
    }
    <# End Trigger Deactivation #>

    # Stop the Integration Runtime
    Write-Output "Pausing Integration Runtime $($IntegrationRuntime.Name)."
    $null = Stop-AzureRmDataFactoryV2IntegrationRuntime `
        -DataFactoryName $IntegrationRuntime.DataFactoryName `
        -Name $IntegrationRuntime.Name `
        -ResourceGroupName $IntegrationRuntime.ResourceGroupName `
        -Force
    Write-Output "Done"
}
else
{
    # Write message to screen (not throwing error)
    Write-Output "Integration Runtime $($IntegrationRuntime.Name) is not running."
}
```

```powershell
# This script resumes your Integration Runtime (and its trigger) if it is stopped

# Parameters
$ConnectionName = 'AzureRunAsConnection'
$DataFactoryName = 'ADF-SSISJoost'
$ResourceGroup = 'joost_van_rossum'
$TriggerName = 'Hourly'

# Do not continue after an error
$ErrorActionPreference = "Stop"

########################################################
# Log in to Azure (standard code)
########################################################
Write-Verbose -Message 'Connecting to Azure'
try
{
    # Get the connection "AzureRunAsConnection"
    $ServicePrincipalConnection = Get-AutomationConnection -Name $ConnectionName

    'Log in to Azure...'
    $null = Add-AzureRmAccount `
        -ServicePrincipal `
        -TenantId $ServicePrincipalConnection.TenantId `
        -ApplicationId $ServicePrincipalConnection.ApplicationId `
        -CertificateThumbprint $ServicePrincipalConnection.CertificateThumbprint
}
catch
{
    if (!$ServicePrincipalConnection)
    {
        # You forgot to turn on 'Create Azure Run As account'
        $ErrorMessage = "Connection $ConnectionName not found."
        throw $ErrorMessage
    }
    else
    {
        # Something went wrong
        Write-Error -Message $_.Exception.Message
        throw $_.Exception
    }
}
########################################################

# Get Integration Runtime in Azure Data Factory
# (assumes the factory contains exactly one Integration Runtime)
$IntegrationRuntime = Get-AzureRmDataFactoryV2IntegrationRuntime `
    -DataFactoryName $DataFactoryName `
    -ResourceGroupName $ResourceGroup

# Check if Integration Runtime was found
if (!$IntegrationRuntime)
{
    # Your ADF does not have an Integration Runtime
    # or the ADF does not exist
    $ErrorMessage = "No Integration Runtime found in ADF $($DataFactoryName)."
    throw $ErrorMessage
}
elseif ($IntegrationRuntime.State -ne "Started")
{
    # The Integration Runtime is not running: resume it
    Write-Output "Resuming Integration Runtime $($IntegrationRuntime.Name)."
    $null = Start-AzureRmDataFactoryV2IntegrationRuntime `
        -DataFactoryName $IntegrationRuntime.DataFactoryName `
        -Name $IntegrationRuntime.Name `
        -ResourceGroupName $IntegrationRuntime.ResourceGroupName `
        -Force
    Write-Output "Done"
}
else
{
    # Write message to screen (not throwing error)
    Write-Output "Integration Runtime $($IntegrationRuntime.Name) is already running."
}

<# Start Trigger Activation #>
# Get the trigger to check if it exists
$Trigger = Get-AzureRmDataFactoryV2Trigger `
    -DataFactoryName $DataFactoryName `
    -Name $TriggerName `
    -ResourceGroupName $ResourceGroup

if (!$Trigger)
{
    # Possible causes:
    # The ADF does not exist (typo)
    # The trigger does not exist (typo)
    $ErrorMessage = "Trigger $($TriggerName) not found."
    throw $ErrorMessage
}
elseif ($Trigger.RuntimeState -ne "Started")
{
    # The trigger is not activated: start it
    Write-Output "Resuming Trigger $($TriggerName)"
    $null = Start-AzureRmDataFactoryV2Trigger `
        -DataFactoryName $DataFactoryName `
        -Name $TriggerName `
        -ResourceGroupName $ResourceGroup `
        -Force
}
else
{
    # Write message to screen (not throwing error)
    Write-Output "Trigger $($TriggerName) is already activated."
}
<# End Trigger Activation #>
```

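Note: if you don't want to disable and enable your trigger, remove the trigger section from both scripts. In the pause script, remove the lines between <# Start Trigger Deactivation #> and <# End Trigger Deactivation #>. In the resume script, remove everything from <# Start Trigger Activation #> to the end of the script.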

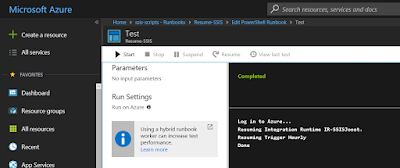
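Step 6 mentions that both scripts could be merged into one runbook. One way to do that while keeping separate schedules is to let each schedule pass a runbook parameter such as $Action ('Pause' or 'Resume'), and then decide based on the State property. Below is a minimal sketch of that decision. Get-IntegrationRuntimeCommand and $Action are hypothetical. Unlike the original resume script, the sketch also leaves the IR alone in the transitional states 'Starting' and 'Stopping'. That is an assumption about which values State can hold.

```powershell
# Hypothetical helper for a merged pause/resume runbook.
# $State is the IR's State property (e.g. 'Started', 'Stopped', 'Starting').
# $Action would come from a runbook parameter that differs per schedule.
function Get-IntegrationRuntimeCommand {
    param(
        [Parameter(Mandatory)] [string] $State,
        [Parameter(Mandatory)] [ValidateSet('Pause', 'Resume')] [string] $Action
    )

    # Leave the IR alone while it is still changing state
    if ($State -in @('Starting', 'Stopping')) { return 'Wait' }

    if ($Action -eq 'Pause') {
        # Same check as the pause script: only stop a running IR
        if ($State -eq 'Started') { return 'Stop' }
    }
    else {
        # Same check as the resume script: start anything that is not running
        if ($State -ne 'Started') { return 'Start' }
    }
    return 'None'
}
```

A returned 'Stop' or 'Start' would then be mapped to Stop-AzureRmDataFactoryV2IntegrationRuntime or Start-AzureRmDataFactoryV2IntegrationRuntime, exactly as in the two scripts above.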

7) Testing

You can use the Test Pane menu option in the editor to test your PowerShell scripts. When you click Run, the script is first queued and then started. Running takes a couple of minutes.

*Pausing Integration Runtime*

*Resuming Integration Runtime (20 minutes+)*

8) Publish

When your script is ready, it is time to publish it. Above the editor click on the Publish button. Confirm overriding any previously published versions.

*Publish your script*

9) Schedule

Now that we have a working and published Azure Runbook, we need to schedule it. Click on Schedule to create a new schedule for your runbook. My packages run every hour during working hours, so for the resume script I created a schedule that runs every working day at 7:00 AM. The pause script could, for example, be scheduled on working days at 9:00 PM (21:00).

Now you need to hit the refresh button in the Azure Data Factory dashboard to see if it really works. It takes a few minutes to run, so don't worry too soon.

*Add schedule*
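You can also create the schedules with PowerShell instead of the portal. A schedule needs a start time in the future, and Azure Automation may reject one that is too close to the current time. The sketch below therefore includes a small hypothetical helper that returns the next working day at a given hour. The commented lines show how that value could be used with New-AzureRmAutomationSchedule and Register-AzureRmAutomationScheduledRunbook from the AzureRM.Automation module. The Automation Account, runbook and schedule names are placeholders, and the sketch does not cover time zones.

```powershell
# Hypothetical helper: the next Monday-Friday moment at $Hour:00 after $From.
function Get-NextWorkdayTime {
    param(
        [Parameter(Mandatory)] [ValidateRange(0, 23)] [int] $Hour,
        [datetime] $From = (Get-Date)
    )

    $weekend = @([DayOfWeek]::Saturday, [DayOfWeek]::Sunday)
    $candidate = $From.Date.AddHours($Hour)

    # Step one day at a time until the moment is in the future and on a weekday
    while ($candidate -le $From -or $weekend -contains $candidate.DayOfWeek) {
        $candidate = $candidate.AddDays(1)
    }
    return $candidate
}

# Example usage (placeholder names, requires the AzureRM.Automation module):
# $start = Get-NextWorkdayTime -Hour 7
# New-AzureRmAutomationSchedule -AutomationAccountName 'MyAutomationAccount' `
#     -ResourceGroupName 'joost_van_rossum' -Name 'ResumeWorkdays' -StartTime $start `
#     -WeekInterval 1 -DaysOfWeek Monday, Tuesday, Wednesday, Thursday, Friday
# Register-AzureRmAutomationScheduledRunbook -AutomationAccountName 'MyAutomationAccount' `
#     -ResourceGroupName 'joost_van_rossum' -RunbookName 'ResumeIR' -ScheduleName 'ResumeWorkdays'
```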

Summary

In this post you saw how you can pause and resume your Integration Runtime in ADF to save some money on your Azure bill during the quiet hours. As said before, pausing and resuming the trigger is optional. When creating the schedule, keep in mind that resuming/starting takes around 20 minutes to finish, and that you also pay during this startup phase.
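To get a feeling for the saving, you can compare the number of hours the IR runs per week. Below is a minimal sketch. It ignores the time needed to stop the IR and any billing granularity details. Get-WeeklyRuntimeHours is a hypothetical helper, and the 7:00 and 21:00 values are the example schedule from step 9.

```powershell
# Hypothetical helper: hours per week that the IR is running (and billed)
# when it is resumed at $ResumeHour and paused at $PauseHour on each working day.
function Get-WeeklyRuntimeHours {
    param(
        [Parameter(Mandatory)] [double] $ResumeHour,
        [Parameter(Mandatory)] [double] $PauseHour,
        [int] $WorkDays = 5
    )
    return ($PauseHour - $ResumeHour) * $WorkDays
}

$scheduled = Get-WeeklyRuntimeHours -ResumeHour 7 -PauseHour 21   # 14 hours x 5 days = 70
$alwaysOn  = 24 * 7                                             # 168
$saved     = 1 - $scheduled / $alwaysOn                         # fraction of hours saved
Write-Output ("Running hours per week: {0} instead of {1}" -f $scheduled, $alwaysOn)
```

Resuming takes around 20 minutes. If a package must run at 7:00, the resume would need to be scheduled around 6:40. Calling the helper with `-ResumeHour (6 + 40/60)` gives about 71.7 hours per week.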

Steps 5 to 9 need to be repeated for the resume script after you have finished the pause script.

Update: you can also pause and resume the IR in the pipeline itself if you only have one ETL job.

Update 2: this is now even easier via the REST API.
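The Azure management REST API exposes start and stop operations for an Integration Runtime. Below is a minimal sketch that only builds the request URI. Get-AdfIntegrationRuntimeUri is a hypothetical helper, and api-version 2018-06-01 is the Data Factory V2 REST API version assumed here. The names are not URL-encoded.

```powershell
# Hypothetical helper: build the management REST URI that starts or stops
# an Integration Runtime. Assumes api-version 2018-06-01 of Data Factory V2.
function Get-AdfIntegrationRuntimeUri {
    param(
        [Parameter(Mandatory)] [string] $SubscriptionId,
        [Parameter(Mandatory)] [string] $ResourceGroup,
        [Parameter(Mandatory)] [string] $DataFactoryName,
        [Parameter(Mandatory)] [string] $IntegrationRuntimeName,
        [Parameter(Mandatory)] [ValidateSet('start', 'stop')] [string] $Operation,
        [string] $ApiVersion = '2018-06-01'
    )

    # Path segments of the Integration Runtime resource, followed by the operation
    $segments = @(
        'subscriptions', $SubscriptionId,
        'resourceGroups', $ResourceGroup,
        'providers', 'Microsoft.DataFactory',
        'factories', $DataFactoryName,
        'integrationRuntimes', $IntegrationRuntimeName,
        $Operation.ToLowerInvariant()
    )
    return "https://management.azure.com/$($segments -join '/')?api-version=$ApiVersion"
}
```

The URI would be called with a POST request. One option is Invoke-RestMethod -Method Post with a bearer token. Another is a Web activity in the pipeline that authenticates with the data factory's managed identity. Obtaining the token and granting that identity permissions on the factory are not covered here.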