Case

I have a slowly changing dimension type II (with a start and end date). How do I create a working lookup in my dataflow?

Solution

There are roughly three options:

A) Override lookup query with custom query

B) Split dimension in separate days with datetime dimension

C) Split dimension in separate days in second table

Let’s say we have a fact for employee number 123456789 with fact date 12 December 2010 and we want to know the right EmployeeId.

Sample of Dim_Employee table:

| EmpoyeeId | EmpoyeeNumber | Firstname | LastName | Department | Startdate | Enddate |

|---|---|---|---|---|---|---|

| 1 | 123456789 | John | Gilbert | DepartmentX | 2004-01-01 | 2005-12-31 |

| 12 | 123456789 | John | Gilbert | DepartmentZ | 2006-01-01 | 2010-12-31 |

| 19 | 123456789 | John | Gilbert | DepartmentA | 2011-01-01 | 9999-12-31 |

Sample of the dataflow:

|

| Partial dataflow |

The lookup should return 12 as EmployeeId for this example.

Solution A

Override lookup query with custom query

A1) Query

Let’s have a closer look at the lookup query. Select the EmpoyeeId, EmpoyeeNumber, Startdate and Enddate from the employee dimension table (don't just select the whole table: see Performance Best Practices).

|

| Lookup Query |

A2) Select columns

Select the input columns to map the lookup columns. Now you see the problem: The factdate can either map the Startdate or Enddate. There is no between here. For now map the EmployeeNumber and the Factdate according to the sample and select EmployeeId as a new Column.

|

| Column Mappings |

A3) Custom Query

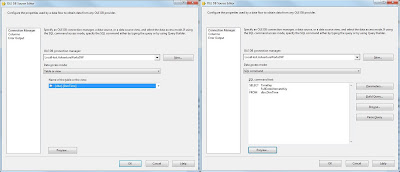

This step is a little different for SSIS 2005 and SSIS 2008 (and newer). Goto the Advanced Tab.

SSIS 2008:

In SSIS 2008 this tab is completely disabled with the message: This page is not used when Full cache mode is selected. To overcome this goto to the General tab and select Partial cache instead of Full Cache. Now you can modify the SQL Statement with the following query:

--Query with parameters

SELECT *

FROM (SELECT EmployeeId

, EmployeeNumber

, StartDate

, EndDate

FROM Dim_Employee) [refTable]

WHERE [refTable].[EmployeeNumber] = ?

AND [refTable].[StartDate] <= ?

AND [refTable].[EndDate] > ?

|

| Modify SQL Statement in SSIS 2008 |

SSIS 2005

For SSIS 2005: just Enable memory restriction and enter the query.

|

| Modify SQL Statement in SSIS 2005 |

A4) Parameters

Now Push the Parameters button on the advanced tab to enter the mappings. Select FactDate (the date column in the source table) for both Parameter1 and Parameter2.

|

| Parameters |

A5) Result

Now your lookup is ready for testing.

|

| The result: EmployeeId 12 |

The big big downside for this method is the lack of caching. You cannot use full cache. It will work for a small number records, but when the numbers grow, it will completely slow down your dataflow.

Solution B

Split dimension in separate days with datetime dimension

This solution is only possible if you have a time dimension.

B1) Query

Join the employee dimension and the time dimension, using between logic in the ON clause. This will result in a row for every dimension member for each day.

--Query with join

SELECT Dim_Employee.EmployeeId

, Dim_Employee.EmployeeNumber

, Dim_Time.Date

FROM Dim_Employee

INNER JOIN Dim_Time

ON Dim_Time.Date

BETWEEN Dim_Employee.StartDate

AND Dim_Employee.EndDate

|

| The new query, join with the time dimension |

B2) Select columns

Select the input columns to map the lookup columns. Unlike option A, the mapping is easy.

|

| Column mapping |

B3) Result

Now you can test the dataflow and see that the result is simulair to Solution A. But we have to narrow down the number of records to improve the performance, because there are over 2500 records for this one employee.

There are a couple of options. If your source data contain only records for the the current year you could change the query to:

--Only current year

SELECT Dim_Employee.EmployeeId

, Dim_Employee.EmployeeNumber

, Dim_Time.Date

FROM Dim_Employee

INNER JOIN Dim_Time

ON Dim_Time.Date

BETWEEN Dim_Employee.StartDate

AND Dim_Employee.EndDate

WHERE YEAR(Dim_Time.Date) = YEAR(GETDATE())

Or you can use a MIN(date) query on your source data and use that in the where clause.

--Use minimum

SELECT Dim_Employee.EmployeeId

, Dim_Employee.EmployeeNumber

, Dim_Time.Date

FROM Dim_Employee

INNER JOIN Dim_Time

ON Dim_Time.Date

BETWEEN Dim_Employee.StartDate

AND Dim_Employee.EndDate

WHERE Dim_Time.Date >= (SELECT MIN(FactDate)

FROM YourStaginTable))

And there are probably some more advanced queries to narrow down the number of records.

Solution C

Split dimension in separate days in second table.

If you don't have a time dimension table, you use a script (or query) to split all dimensions in separate days and copy those to a second table. Then use that second table in your lookup. And of cource try to narrow down the number of reconds just like in Solution B.

Conclusion

Every solution has it's pros and cons. The best solution for you depends on a number of things such as the number of records in your dimension and date spread in the fact records. Test it! Let me know if you found an other solution for your SCD Type II dimension lookup.

Alternatives without the Lookup Transformation

For large volumes of data there are a couple of alternatives:

1) Use a Merge Join Transformation instead of a lookup (join without the dates) and add a Conditional Split Transformation behind it that checks whether the date of the fact table is between the two dates of the dimension table.

2) The fasted option is to use a source query of an OleDB Source Component and do the between query of Solution A in SQL Server.

* Update *

Also see this Script Component solution from Matt Masson.